Kubernetes and Mesos often appear to be competing to become the go-to container orchestration platform, but each has its own strengths and offers a distinct solution.

Mesos is better suited to non-containerized workloads and is often credited with a more mature API and a smaller footprint, while Kubernetes boasts a wider ecosystem of tools. Plus, more professionals list Kubernetes on their resumes!

Mesos vs. Kubernetes

Kubernetes was designed from the ground up to orchestrate containers (originally Docker containers), managing their deployment and scheduling onto nodes within a compute cluster. Application developers can deploy apps quickly and scale them according to workload demand, which speeds up DevOps while reducing operational complexity.

Mesos is an established platform that can run non-containerized workloads like Apache Hadoop and Kafka on shared infrastructure, while providing a framework that lets you interleave existing and new applications.

Mesos supports several container networking modes that frameworks such as Marathon can use, including IP-per-container networking (through the Container Network Interface, CNI) and bridge mode. With IP-per-container, each container gets its own address, which removes the need to map container ports to host ports and makes communication between containers on different nodes more efficient. Mesos also makes cluster metrics easy to collect: masters and agents expose them over HTTP, and external tools such as the ELK stack or Prometheus with Grafana can turn them into detailed views of the performance and health of individual components.
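As a small illustration of pulling metrics directly, a Mesos master serves its counters as a flat JSON object at the /metrics/snapshot endpoint (port 5050 by default). The sketch below assumes you pass in a reachable master URL; fetch_snapshot and utilization are hypothetical helper names, and the sample values are made up so the calculation can run offline. It derives the share of cluster CPU and memory currently allocated to tasks from the master/cpus_* and master/mem_* gauges.

```python
import json
from urllib.request import urlopen


def fetch_snapshot(master_url: str) -> dict:
    """Fetch the flat metrics JSON from a Mesos master, e.g. http://localhost:5050."""
    with urlopen(f"{master_url}/metrics/snapshot") as resp:
        return json.load(resp)


def utilization(snapshot: dict) -> dict:
    """Return the fraction of cluster CPU and memory allocated to tasks.

    The master/*_used gauges count resources allocated to running tasks,
    not measured consumption inside the containers.
    """
    result = {}
    for resource in ("cpus", "mem"):
        total = snapshot.get(f"master/{resource}_total", 0.0)
        used = snapshot.get(f"master/{resource}_used", 0.0)
        # Avoid dividing by zero when no agents have registered yet.
        result[resource] = used / total if total else 0.0
    return result


# Offline example with a hand-written snapshot (values are illustrative).
sample = {
    "master/cpus_total": 16.0,
    "master/cpus_used": 4.0,
    "master/mem_total": 32768.0,
    "master/mem_used": 8192.0,
}
print(utilization(sample))  # {'cpus': 0.25, 'mem': 0.25}
```

Against a live cluster you would call utilization(fetch_snapshot("http://<master-host>:5050")) instead; exporters for Prometheus scrape the same endpoint.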

Brief Overview of Mesos

Mesos is a distributed systems kernel developed at the University of California, Berkeley, to share resources among workloads in data centers and clouds. It uses a flexible master-agent architecture (agents were called slaves in earlier releases) and isolates applications, for example with Linux cgroups, so they don't compete for the same resources. The Mesos master tracks the resources that agents report and, following its allocation policy, offers them to frameworks. Each framework's scheduler accepts or declines these offers and tells the master which tasks to launch; the master forwards those tasks to agents, where executors run them. When tasks finish, their status flows back through the master to the framework, the freed resources are offered again, and the cycle repeats.
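The offer cycle described above is often called two-level scheduling: the master decides what to offer, and each framework decides what to run. The following is a toy, in-memory sketch of that split under simplifying assumptions. Task, Offer, FrameworkScheduler and run_offer_cycle are hypothetical names, not the Mesos API, and frameworks are simply visited in list order, whereas the real master's allocator decides who receives each offer (by default with Dominant Resource Fairness).

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    cpus: float
    mem: float  # MB


@dataclass
class Offer:
    agent_id: str
    cpus: float
    mem: float


@dataclass
class FrameworkScheduler:
    """Framework side: picks pending tasks that fit inside an offer."""
    name: str
    pending: list = field(default_factory=list)

    def resource_offers(self, offer: Offer) -> list:
        accepted = []
        cpus, mem = offer.cpus, offer.mem
        for task in list(self.pending):
            if task.cpus <= cpus and task.mem <= mem:
                cpus -= task.cpus
                mem -= task.mem
                accepted.append(task)
                self.pending.remove(task)
        # Anything left over is implicitly declined and returns to the master.
        return accepted


def run_offer_cycle(agents: dict, frameworks: list) -> list:
    """Master side: offer each agent's free resources to frameworks in turn."""
    launches = []
    for agent_id, (cpus, mem) in agents.items():
        for fw in frameworks:
            for task in fw.resource_offers(Offer(agent_id, cpus, mem)):
                # The master deducts accepted resources before the next offer.
                cpus -= task.cpus
                mem -= task.mem
                launches.append((fw.name, task.name, agent_id))
    return launches


agents = {"agent-1": (4.0, 4096.0), "agent-2": (2.0, 2048.0)}
spark = FrameworkScheduler("spark", [Task("executor-1", 2.0, 2048.0),
                                     Task("executor-2", 2.0, 2048.0)])
marathon = FrameworkScheduler("marathon", [Task("web", 1.0, 512.0)])
print(run_offer_cycle(agents, [spark, marathon]))
# [('spark', 'executor-1', 'agent-1'), ('spark', 'executor-2', 'agent-1'), ('marathon', 'web', 'agent-2')]
```

Because the framework, not the master, chooses which tasks to place, Spark and Marathon can apply entirely different placement logic to the same pool of agents.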

With Mesos, developers get immediate access to cluster resources for scaling existing services or deploying new ones. Hardware failure is also less of a worry: when an agent fails, Mesos notifies the affected frameworks, and schedulers such as Marathon can relaunch the lost tasks on healthy nodes.

Mesos can support a wide range of workloads, including microservices, containerized apps, and NoSQL databases, through popular frameworks such as Marathon for container orchestration, Cassandra for NoSQL storage, and Spark for large-scale data processing.

Brief Overview of Kubernetes

Kubernetes, developed by Google and donated to the Cloud Native Computing Foundation, is an open source cluster management platform that makes container orchestration easier, so developers and sysadmins/DevOps engineers can spend less time on infrastructure chores and more on their applications. Its distributed architecture, in which controllers continuously drive the cluster toward the state users declare, also makes it more robust and resilient than the monolithic compute platforms that preceded it.

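A cluster consists of a control plane (historically called the master, and often spread across several servers for high availability) and multiple worker servers. The control plane is the brain of the cluster: its API server gives users access, controllers monitor the health of nodes and workloads, the scheduler assigns pods to nodes based on their resource requests and constraints, and the cluster state is stored in etcd.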
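The Kubernetes scheduler places a pod in two phases: it filters out nodes that cannot run the pod, then scores the remaining nodes and picks the best one. The sketch below is a heavily simplified model of that idea, assuming only CPU and memory requests and a single "least allocated" style score; Node, feasible, score and schedule are hypothetical names, and the real scheduler runs many filter and score plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_allocatable: float  # cores
    mem_allocatable: float  # MiB
    cpu_requested: float = 0.0  # sum of requests of pods already on the node
    mem_requested: float = 0.0
    ready: bool = True


def feasible(node: Node, cpu: float, mem: float) -> bool:
    """Filter step: drop nodes that are not Ready or lack room for the requests."""
    return (node.ready
            and node.cpu_requested + cpu <= node.cpu_allocatable
            and node.mem_requested + mem <= node.mem_allocatable)


def score(node: Node, cpu: float, mem: float) -> float:
    """Score step (least-allocated style): prefer nodes left with more free capacity."""
    cpu_free = (node.cpu_allocatable - node.cpu_requested - cpu) / node.cpu_allocatable
    mem_free = (node.mem_allocatable - node.mem_requested - mem) / node.mem_allocatable
    return 100 * (cpu_free + mem_free) / 2


def schedule(nodes: list, cpu: float, mem: float) -> Optional[str]:
    candidates = [n for n in nodes if feasible(n, cpu, mem)]
    if not candidates:
        return None  # the pod would stay Pending
    return max(candidates, key=lambda n: score(n, cpu, mem)).name


nodes = [
    Node("node-a", 4.0, 8192.0, cpu_requested=3.0, mem_requested=7168.0),
    Node("node-b", 4.0, 8192.0, cpu_requested=1.0, mem_requested=2048.0),
    Node("node-c", 8.0, 16384.0, ready=False),
]
print(schedule(nodes, cpu=0.5, mem=512.0))  # node-b
```

Note that node-c is skipped despite having the most capacity, because filtering happens before scoring.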

Worker servers (also called nodes) run a standard operating system plus the kubelet agent, a container runtime (Docker originally, now typically containerd or CRI-O), and kube-proxy. They host both stateless workloads and stateful ones such as NoSQL databases. Their main role is to receive work instructions from the control plane and run pods according to the specifications developers provide. Each pod gets its own unique IP address, shared by all containers in that pod, so there is no need to map container ports to host ports and networking rules stay flexible. The kubelet on each node also keeps a close eye on health, tracking memory, CPU, I/O, and storage usage for the node and for each pod, and reports node conditions such as memory or disk pressure to the control plane.
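To make the node health reporting concrete, the kubelet compares observed signals against eviction thresholds; on Linux its default hard thresholds include memory.available<100Mi, nodefs.available<10% and imagefs.available<15%, and crossing them sets the MemoryPressure or DiskPressure condition. The following is a simplified sketch assuming only those three signals (inode thresholds, soft thresholds and grace periods are left out); node_conditions is a hypothetical helper, not kubelet code.

```python
MI = 1024 * 1024

# kubelet's default hard eviction thresholds on Linux (inode signals omitted here).
HARD_EVICTION = {
    "memory.available": 100 * MI,   # absolute: 100Mi
    "nodefs.available": 0.10,       # fraction of the node filesystem
    "imagefs.available": 0.15,      # fraction of the image filesystem
}


def node_conditions(memory_available: int, nodefs_free: float, imagefs_free: float) -> dict:
    """Map observed signals to the MemoryPressure / DiskPressure node conditions."""
    return {
        "MemoryPressure": memory_available < HARD_EVICTION["memory.available"],
        "DiskPressure": (nodefs_free < HARD_EVICTION["nodefs.available"]
                         or imagefs_free < HARD_EVICTION["imagefs.available"]),
    }


print(node_conditions(64 * MI, nodefs_free=0.40, imagefs_free=0.50))
# {'MemoryPressure': True, 'DiskPressure': False}
```

When a condition is true, the scheduler avoids placing new pods on that node and the kubelet may start evicting pods to reclaim the resource.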

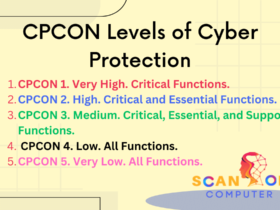

Why Do You Need Container Orchestration Engines?

Managing multi-container applications calls for a container orchestration platform that handles the deployment process efficiently and reliably, from initial setup and scheduling through updates and monitoring. Engineers can use such platforms to deploy their apps easily across development and test environments or cloud providers while making sure everything runs as intended.

Container orchestration provides many benefits beyond automating deployment. It lets you take advantage of containers' scalability and, on platforms such as Mesos, manage non-containerized workloads in the same cluster. These solutions also automate many other tasks, including resource allocation and balancing, scaling up and down, service discovery, and protection against container and host failures.
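Scaling and failure recovery in orchestrators usually come from a reconciliation loop: compare the desired state with what is actually running, then act to close the gap. Kubernetes controllers such as the ReplicaSet controller follow this pattern. Below is a minimal sketch of one pass of such a loop, assuming replicas are tracked by name and a separate health check supplies the healthy set; reconcile is a hypothetical function, not any orchestrator's API.

```python
def reconcile(desired: int, running: list, healthy: set) -> dict:
    """Compare desired replicas with what is running and return corrective actions.

    running: names of replicas the controller currently tracks.
    healthy: the subset that passed health checks (e.g. their host is still up).
    """
    alive = [r for r in running if r in healthy]
    # Failed replicas are removed and replaced rather than repaired in place.
    to_delete = [r for r in running if r not in healthy]
    if len(alive) > desired:
        # Scale down: drop the surplus replicas.
        to_delete += alive[desired:]
        alive = alive[:desired]
    return {"start": desired - len(alive), "delete": to_delete}


# One replica's host died, and the operator has asked for 4 replicas instead of 3.
print(reconcile(4, ["web-1", "web-2", "web-3"], {"web-1", "web-3"}))
# {'start': 2, 'delete': ['web-2']}
```

A real controller runs this comparison continuously, so the same code path handles scale-up, scale-down, and host failures.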

Mesos is an ideal platform for building solutions that support both Docker containerized applications and traditional legacy apps, and it can be deployed across cloud providers and data centers. Mesos is more mature than Kubernetes, and DC/OS, the Mesos distribution backed by Mesosphere, makes deployment and day-to-day use quick and simple.

Logging and Monitoring

Kubernetes comes with basic logging and monitoring features that make troubleshooting easier: kubectl logs retrieves container output, and the Metrics API (served by the metrics-server add-on) reports CPU and memory usage. Kubernetes does not scan containers for viruses or other malware itself; image scanning usually comes from third-party tools, while admission policies such as Pod Security admission can enforce security defaults for specific namespaces or across a cluster. Kubernetes also provides load balancing through Services, which give a set of pods a stable virtual IP address and DNS name, and cluster DNS publishes SRV records for named Service ports so clients can discover both the address and the port.
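Kubernetes' DNS-based service discovery uses predictable names: a Service resolves at service.namespace.svc.zone, and each named port gets an SRV record at _port-name._protocol.service.namespace.svc.zone. The sketch below only builds those names as strings, assuming the common default cluster domain cluster.local; the Service web in namespace shop is a hypothetical example, and the helper names are illustrative.

```python
def service_name(service: str, namespace: str, zone: str = "cluster.local") -> str:
    """A/AAAA name that resolves to the Service's cluster IP."""
    return f"{service}.{namespace}.svc.{zone}"


def service_srv_name(port_name: str, protocol: str, service: str,
                     namespace: str, zone: str = "cluster.local") -> str:
    """SRV name for a named Service port; the record's target is service_name(...)."""
    return f"_{port_name}._{protocol.lower()}.{service_name(service, namespace, zone)}"


print(service_srv_name("http", "TCP", "web", "shop"))
# _http._tcp.web.shop.svc.cluster.local
```

A client inside the cluster can query that SRV name to learn the port number as well as the host, which is useful when port numbers differ between environments.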

Mesos has a flexible architecture that lets it run mission-critical workloads such as Java application servers, Jenkins CI jobs, Apache Spark analytics, and Kafka streaming on one platform. Its fault-tolerant design, with standby masters and agent recovery, allows masters and agents to be upgraded without downtime for running tasks. Its allocator distributes CPU and memory across the cluster according to the policies you configure, such as roles, weights, and quotas.
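Mesos' default allocator is based on Dominant Resource Fairness (DRF): the next resources go to the framework whose largest share of any single resource is currently smallest. Here is a simplified, single-level sketch of DRF, assuming every framework launches identical tasks and ignoring roles, weights, quotas and reservations; drf is a hypothetical function, and the numbers are the classic 9-CPU, 18 GB textbook example rather than a real cluster.

```python
def drf(capacity: dict, demands: dict) -> dict:
    """Simplified Dominant Resource Fairness.

    capacity: total of each resource, e.g. {"cpus": 9.0, "mem": 18.0}
    demands:  per-framework demand of one task; every demand must be positive
              in at least one resource, or the loop would never end.
    Returns the number of tasks each framework is allocated.
    """
    used = {r: 0.0 for r in capacity}
    alloc = {f: {r: 0.0 for r in capacity} for f in demands}
    tasks = {f: 0 for f in demands}
    active = set(demands)

    def dominant_share(f: str) -> float:
        # A framework's largest share of any single resource.
        return max(alloc[f][r] / capacity[r] for r in capacity)

    while active:
        # Allocate next to the framework with the lowest dominant share (ties by name).
        f = min(sorted(active), key=dominant_share)
        demand = demands[f]
        if all(used[r] + demand.get(r, 0.0) <= capacity[r] for r in capacity):
            for r in capacity:
                used[r] += demand.get(r, 0.0)
                alloc[f][r] += demand.get(r, 0.0)
            tasks[f] += 1
        else:
            active.discard(f)  # its next task no longer fits
    return tasks


print(drf({"cpus": 9.0, "mem": 18.0},
          {"A": {"cpus": 1.0, "mem": 4.0}, "B": {"cpus": 3.0, "mem": 1.0}}))
# {'A': 3, 'B': 2}
```

Framework A is memory-heavy and B is CPU-heavy, yet both end up with the same dominant share (2/3 of memory for A, 2/3 of CPU for B), which is the fairness property DRF aims for.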

Though most people associate Mesos with container orchestration, its modular architecture lets it run different kinds of workloads on the same cluster simultaneously, with a framework such as Marathon orchestrating long-running services. Marathon was originally created to run application archives in Linux cgroup containers and has since been extended to support Docker containers as well.
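Marathon accepts JSON app definitions over its REST API (POST /v2/apps), and the same format covers both Docker images and plain commands. The sketch below builds one of each as Python dicts, assuming a reachable Marathon URL for the optional deploy call; the app IDs, the nginx:1.25 image and the java -jar legacy-service.jar command are hypothetical examples, and the helpers are illustrative rather than a Marathon client library.

```python
import json
from urllib.request import Request, urlopen


def docker_app(app_id: str, image: str, instances: int = 1,
               cpus: float = 0.5, mem: float = 256) -> dict:
    """App definition for a Docker container run by Marathon."""
    return {
        "id": app_id,
        "instances": instances,
        "cpus": cpus,
        "mem": mem,  # MB
        "container": {"type": "DOCKER", "docker": {"image": image}},
    }


def command_app(app_id: str, cmd: str, instances: int = 1,
                cpus: float = 0.5, mem: float = 256) -> dict:
    """App definition for a plain command run without a Docker image."""
    return {"id": app_id, "cmd": cmd, "instances": instances, "cpus": cpus, "mem": mem}


def deploy(marathon_url: str, app: dict) -> dict:
    """POST an app definition to Marathon's /v2/apps endpoint (network call)."""
    req = Request(f"{marathon_url}/v2/apps", data=json.dumps(app).encode(),
                  headers={"Content-Type": "application/json"}, method="POST")
    with urlopen(req) as resp:
        return json.load(resp)


# Both kinds of workload can share the same cluster (hypothetical apps):
web = docker_app("/web", "nginx:1.25", instances=2)
legacy = command_app("/legacy-service", "java -jar legacy-service.jar")
print(json.dumps(web, indent=2))
```

Keeping the definitions as plain data makes them easy to version-control; deploy(...) is only needed when you actually submit them.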

Why We Chose Kubernetes Over Mesos

Kubernetes is easy to deploy and manage, making it a good fit for small businesses and startups. It provides advanced features such as centralized control, automation, and scalability, which reduce downtime, increase application availability, and streamline deployments and rollouts.
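Much of what makes Kubernetes rollouts safe is the RollingUpdate strategy of a Deployment, controlled by maxSurge and maxUnavailable (both 25% by default). Percentages are turned into pod counts with maxSurge rounding up and maxUnavailable rounding down. The sketch below computes the resulting bounds, assuming the values are either integers or percentage strings; resolve and rollout_bounds are hypothetical helpers, not part of any Kubernetes client.

```python
import math


def resolve(value, replicas: int, round_up: bool) -> int:
    """Turn an absolute number or a percentage string like "25%" into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1]) / 100
        return math.ceil(scaled) if round_up else math.floor(scaled)
    return int(value)


def rollout_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> tuple:
    """Return (max pods during the rollout, min available pods)."""
    surge = resolve(max_surge, replicas, round_up=True)               # maxSurge rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        # Kubernetes falls back to maxUnavailable=1 so the rollout can make progress.
        unavailable = 1
    return replicas + surge, replicas - unavailable


print(rollout_bounds(10))  # (13, 8)
print(rollout_bounds(1))   # (2, 1)
```

With 10 replicas and the defaults, the rollout never runs more than 13 pods and never drops below 8 available ones, which is how availability is preserved while new versions roll out.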

Mesos is more complex and has a steep learning curve, but it is highly customizable and can run many kinds of workloads. It is an ideal solution for organizations that already run applications such as Java applications, Jenkins CI jobs, Apache Spark analytics, or Apache Kafka streaming, because it lets them interleave these applications with containerized ones on a single cluster.

Mesos can also run stateful services, since agents can provide persistent volumes on local disk that outlive the tasks using them. Running several masters, with ZooKeeper electing the leader, gives the cluster high availability. There is also an abundance of open source add-ons and integrations that extend Mesos further.
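High availability across masters depends on majority agreement: with the replicated-log registry, the master's --quorum flag must be set to a majority of the masters. The arithmetic below shows why odd master counts are usually recommended, assuming the simple majority rule; quorum and tolerated_failures are hypothetical helper names.

```python
def quorum(masters: int) -> int:
    """Smallest majority of masters; the value to pass to --quorum."""
    if masters < 1:
        raise ValueError("need at least one master")
    return masters // 2 + 1


def tolerated_failures(masters: int) -> int:
    """How many masters can fail while a majority is still reachable."""
    return masters - quorum(masters)


for n in (1, 3, 4, 5):
    print(n, quorum(n), tolerated_failures(n))
# 1 1 0
# 3 2 1
# 4 3 1
# 5 3 2
```

Going from 3 to 4 masters raises the quorum without tolerating any extra failure, so a fourth master adds cost but no resilience.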

Narrowing down Container Orchestration Tools

There are many container orchestration tools on the market, so you need to select the one that meets your application's needs. Look for a tool that is easy enough to implement and backed by a strong development team that ships regular updates.

Docker Swarm, Nomad, and Kubernetes are among the most widely used container orchestration tools. They differ in scope and complexity: Swarm and Kubernetes focus on containerized workloads, while Nomad can also schedule non-containerized ones, such as plain executables and Java applications.

Swarm, for instance, is much simpler to set up than Kubernetes but offers fewer features, making it suitable for organizations that prefer an easier learning curve or need a less complex platform for running small applications.

Mesos is more advanced than many of the other container orchestration tools on the market and can handle mission critical workloads such as Java application servers, Jenkins CI Jobs, Apache Spark analytics and Apache Kafka streaming. Furthermore, it works well in public cloud environments and scales up to thousands of nodes for deployment.

About DC/OS and Kubernetes

Kubernetes and Mesos offer similar features, but DC/OS provides an enhanced platform for teams that need to manage non-containerized applications at scale, and its mature API allows seamless integration with existing systems.

DC/OS also supports an impressive set of cluster networking features, including dynamic mapping of container ports to host ports and automatic DNS-based service discovery. Its network implementation differs significantly from that of Kubernetes, which uses two separate address ranges: one for pods and another for services.

Mesosphere's move toward a pure Kubernetes product has drawn considerable attention, but what really stands out is how users are turning to more flexible options as they embrace containers at scale. Much like choosing between Chrome and Firefox, the decision ultimately comes down to what works best for your organization and best serves its needs.
