Log files provide IT professionals with valuable information that enables them to proactively detect, troubleshoot, and resolve technical issues. Logs also improve network observability, offer transparency and enhance application performance over time.

Different log types serve different purposes: change logs provide a chronological record of changes made to an application or file; availability logs monitor system uptime and performance; resource logs track connectivity issues or capacity restrictions; and threat logs record any activity matching firewall security profiles.

What are log files?

Log files contain timestamped information that provides context to an event in a system. They may contain structured, semi-structured, or unstructured data depending on their source and type. Server logs offer an unfiltered look at traffic visiting websites such as website referral pages, HTTP status codes, bytes served and more.

Log files are essential components of almost every operating system and piece of software, which use them for purposes ranging from tracking antivirus scan results to storing general messages.

Accessing this valuable data is integral for monitoring security, providing transparency and optimizing performance over time. But realizing that potential means overcoming several hurdles, such as normalizing, scaling and processing data quickly. This is why centralized log management solutions should form part of any IT environment’s infrastructure: they provide faster troubleshooting, increased availability and superior analytics while helping identify breaches before they cause extensive damage.

Why are log files important?

Log files record and track events and actions occurring within an IT system in real time, providing IT teams with insight into its workings at any given moment. This data helps IT teams troubleshoot problems, monitor system performance and security, and detect issues, trends and threats so they can manage systems more effectively.

Log file formats vary depending on the information they collect: change logs display chronological file changes, while availability and resource logs provide detailed insights into server uptime, connectivity issues and capacity restrictions.

Organizations seeking to maximize the value of log file data need efficient management. That is why today’s leading cybersecurity solutions automate and centralize log generation, transmission and storage to ensure timely analysis without disrupting production environments. This approach is crucial in helping IT teams quickly detect cyberattacks while lowering costs and limiting the impact of security incidents on business operations.

Where do Log Files Come From?

Virtually everything you use on your computer, phone, tablet or server generates log files. From operating systems and applications through games and instant messengers to firewalls and virus scanners, these files document everything from events happening over time to who accessed something when.

ITOps teams use these files to monitor infrastructure health, ensure applications run smoothly, and rapidly identify potential issues before they cause downtime. DevOps engineers use them to enhance CI/CD processes and to provide a more secure environment by reviewing logs for code and configuration errors while building, testing and deploying applications. SecOps teams use them for threat hunting, looking for suspicious activity in both blocked and authorized traffic.

Servers create log files to record and store all activities related to a website or application in a specified period, for instance one day. These records are known as event or activity logs. To gain insight from the vast amount of data these logs produce, organizations need an efficient process that collects, aggregates, parses and analyzes them. Graylog makes this easy and also ensures efficient log archival.

Who Uses Log Files?

Log files record, or “log,” certain events occurring within an operating system or piece of software. They provide invaluable insights that allow anyone to discover issues or errors that would otherwise be hard to spot elsewhere.

Nearly every software application and server keeps a log file, with information ranging from basic error messages to detailed records of server activity.

An access log on a website provides useful data that reveals who visited and when, giving website owners insight into user engagement and content creation strategies.

Logs may store sensitive data such as IP addresses, email addresses and legally protected information; therefore they must be created and handled securely.
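One common precaution is to mask sensitive values before a message is written to a log. The following is a minimal Python sketch of that idea, not a complete solution: the two regular expressions and the `redact` helper are illustrative assumptions, and real deployments usually need broader rules.

```python
import re

# Hypothetical patterns for illustration only; they cover simple email
# addresses and IPv4 addresses, not IPv6, tokens or account numbers.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(message: str) -> str:
    """Replace email addresses and IPv4 addresses with placeholders."""
    message = EMAIL_RE.sub("[email]", message)
    return IPV4_RE.sub("[ip]", message)


# Mask a message before handing it to whatever writes the log.
print(redact("login failed for alice@example.com from 203.0.113.7"))
```

Masking at the point of writing means the sensitive value never reaches disk, which is generally easier than scrubbing log files afterwards.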

1. ITOps

IT Operations, or ITOps, is an essential component of modern companies’ IT infrastructures. ITOps teams oversee all IT services offered to their users, including software applications, cloud resources and IT security measures, and provide technical support and service desk services.

ITOps teams are at the forefront of digital transformation, ensuring technological advances align with organizational objectives. They work closely with business leaders to assess application performance, identify the necessary computing, storage and network resources, and oversee a continuous delivery pipeline.

ITOps teams collect raw data from multiple sources in virtual and nonvirtual environments, normalize it, aggregate it and provide alerts. They also use automation to analyze this data for patterns, topology, correlations and anomalies, which lets them identify dependencies before they become critical and saves time and money by reducing manual work. ITOps teams also perform IT disaster recovery planning and testing, putting measures in place to protect data and ensure continuity if a disaster strikes.

2. DevOps

Log files provide DevOps teams with valuable metadata that helps them quickly identify trends and prevent problems before they affect users. With this knowledge, they can act proactively, for example by scheduling a server shutdown for maintenance or offering discounts to customers, instead of responding later with reactive fixes that cost more money and time than needed.

Log management software makes it possible to centralize log data, making it easy for analysts to search and gain insights. Graylog unifies event and performance monitoring data with high-fidelity alerts for faster response times; additionally, Graylog automates log rotation by renaming, resizing, moving, and deleting old log files to make room for new ones.
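To make the idea of log rotation concrete, here is a small sketch using `RotatingFileHandler` from Python’s standard library. It illustrates the general technique only and says nothing about how Graylog implements rotation; the logger name, file name and size limits are assumptions chosen for the demo.

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler


def build_rotating_logger(path: str, max_bytes: int = 1_000_000, backups: int = 3) -> logging.Logger:
    """Return a logger that starts a new file once `path` reaches max_bytes."""
    logger = logging.getLogger("rotation_demo")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    handler = RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


def demo() -> list[str]:
    """Write enough lines to force rotation and return the file names created."""
    with tempfile.TemporaryDirectory() as tmp:
        logger = build_rotating_logger(os.path.join(tmp, "app.log"), max_bytes=200, backups=2)
        for i in range(50):
            logger.info("request %d handled", i)
        # Close handlers so the temporary directory can be removed cleanly.
        for handler in list(logger.handlers):
            handler.close()
            logger.removeHandler(handler)
        return sorted(os.listdir(tmp))


print(demo())
```

With `backupCount=2`, the handler keeps the active file plus two renamed older files and deletes anything older, which is the rename-and-delete cycle described above.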

Today’s technology landscape generates unprecedented amounts of log data, making centralized log management and security more essential than ever. An ideal solution would automatically detect, correlate, and analyze logs for improved visibility, faster responses, and greater protection from attacks.

3. SecOps/Security

Systems and software widely use log files to track errors and other important information, in formats ranging from plain text to CSV, XML and JSON. Analyzing log files provides insight into why certain errors arise as well as ways to prevent future ones.

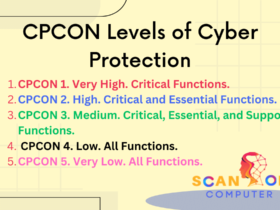

Security teams analyze these files to detect and respond to cyberattacks or other unexpected issues. Using specialized software tools, they can investigate incidents and ascertain their source, so that problems are contained and solved before additional damage occurs.

Companies should use audit trails to identify which systems are accessing printers or other resources and to ensure no one breaches the security infrastructure. Such activities are essential in protecting a company and its digital assets from attacks. They may also reveal patterns in attackers’ behavior that can help establish more robust security infrastructures.

4. IT Analysts

IT Analysts, also known as IT Support analysts, provide technical support services to computer users via email and phone calls. In addition, these individuals possess knowledge about infrastructure security policies as well as software architectures.

IT Analysts use application log files to monitor software performance, track security threats and identify any issues that could impact business operations or end users. Logging data helps them discover new opportunities and anticipate problems before they occur, improving network observability while supporting real-world goals such as reducing costs, increasing IT reliability, meeting compliance and security obligations, and driving revenue growth.

IT Support Analysts must also be able to correlate events quickly in order to troubleshoot problems. Centralized log management tools streamline this process by collecting, normalizing, and analyzing the data automatically. This allows IT teams to see trends, patterns, or correlations that would be difficult or impossible to detect using manual processes alone. For example, reviewing past performance logs can quickly reveal that a slow-running application is related to an overloaded server.
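The slow-application example can be sketched in a few lines of Python. This is a deliberately simplified illustration: the minute-level keys, the sample values and the 90 percent threshold are all assumptions, and a real log management tool would work from parsed log records rather than hand-built structures.

```python
def correlate(slow_request_minutes, cpu_load_by_minute, cpu_threshold=90.0):
    """Return the minutes in which a slow request coincided with high CPU load.

    slow_request_minutes: iterable of "HH:MM" strings taken from application logs.
    cpu_load_by_minute: dict mapping "HH:MM" to CPU utilisation in percent,
    taken from server performance logs.
    """
    return sorted(
        minute
        for minute in set(slow_request_minutes)
        if cpu_load_by_minute.get(minute, 0.0) >= cpu_threshold
    )


# Hypothetical data: two slow requests at 12:05 line up with a CPU spike.
slow = ["12:01", "12:05", "12:05"]
cpu = {"12:01": 35.0, "12:05": 97.5}
print(correlate(slow, cpu))
```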

What Are Log Files and Why Are They Useful?

Log files provide invaluable insight into how visitors and search engines interact with your website, giving you data you can use to detect and address potential issues on it.

Typically, they’re available as text files that can be opened using any text editor (for instance Windows Notepad). Machine-readable formats like CSV or XML may also exist.

What are the types of log files?

Every operating system and program uses log files to record events or problems. A log file typically comprises plain text entries with timestamps attached for every entry; they include details about what kind of event occurred, what caused any issues and any other pertinent details.
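As an illustration of working with such entries, the sketch below splits one timestamped plain-text line into its parts. The layout it expects (date, time, level, message) is an assumption made for this example; actual log formats differ between systems.

```python
from datetime import datetime


def parse_entry(line: str) -> dict:
    """Split a line of the assumed form '<date> <time> <LEVEL> <message>'."""
    date_part, time_part, level, message = line.split(" ", 3)
    timestamp = datetime.strptime(f"{date_part} {time_part}", "%Y-%m-%d %H:%M:%S")
    return {"timestamp": timestamp, "level": level, "message": message}


# Hypothetical log line used only to exercise the parser.
entry = parse_entry("2024-05-01 12:00:03 ERROR Could not open config file")
print(entry["timestamp"].isoformat(), entry["level"], entry["message"])
```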

Log files provide system administrators with invaluable insight into application performance and can identify any areas for improvement. They also help monitor user activity and comply with any governing regulatory requirements.

Log files not only offer essential intelligence, but can also serve as an excellent troubleshooting tool in case of any issues with applications or websites. They can help identify application errors, security risks and explain traffic fluctuations on sites.

Log files are generated by virtually every system and program, though their formats vary significantly. Plain-text logs are the most common, but CSV, XML and JSON logs also exist and can easily be processed using dedicated software applications.
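To show how the same event looks in different formats, here is a short Python sketch that renders one record as plain text, CSV and JSON using only the standard library. The field names and sample values are assumptions for illustration; XML is omitted to keep the example short.

```python
import csv
import io
import json


def as_text(event: dict) -> str:
    """Render the event as a space-separated plain-text line."""
    return f'{event["timestamp"]} {event["level"]} {event["message"]}'


def as_csv(event: dict) -> str:
    """Render the event as one CSV row (quoting is handled by the csv module)."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerow(
        [event["timestamp"], event["level"], event["message"]]
    )
    return buffer.getvalue().strip()


def as_json(event: dict) -> str:
    """Render the event as a JSON object with keys in sorted order."""
    return json.dumps(event, sort_keys=True)


# Hypothetical event used for all three renderings.
event = {"timestamp": "2024-05-01T12:00:00Z", "level": "ERROR", "message": "disk full"}
for render in (as_text, as_csv, as_json):
    print(render(event))
```

Structured formats such as JSON keep field names alongside values, which is why they tend to be easier for software to process than free-form text.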

Event logs

Event logs are local files that store information regarding software and hardware errors, warnings, and events. Administrators and support representatives can use them to identify and resolve systemic issues; they also provide an audit trail for security incidents and compliance audits. Event logging gives applications an efficient method to record important software and hardware events; each event records its identifier, source, date/time stamp, message text and severity level for easy reference.

System events refer to errors and warnings related to the operating system, while application events relate to specific programs – for instance an issue with Microsoft PowerPoint would be recorded here. Security events involve system and data security concerns like login attempts and file deletion attempts, with system administrators choosing which events to log based on their audit policy.

Server event logs provide administrators with detailed information about server uptime and downtime, performance issues and security events, helping them identify any server-related issues. Network event logs are generated by routers, switches, firewalls and other network devices and contain traffic volume statistics, error information and other networking-specific events that help administrators troubleshoot problems and improve overall network performance.

System logs

System logs contain information about an operating system, file system and running applications as well as hardware failures and security events that occur on a system. They’re invaluable resources for troubleshooting system issues and monitoring performance.

Logs can be stored in various directories depending on your operating system and application. On Linux they’re typically kept under /var/log (for example /var/log/syslog on many distributions). To watch one, use tail -f <logname>, which prints the end of the file and then every new line as it is written.

Log files are an indispensable asset for monitoring IT operations, troubleshooting issues and assessing security. Unfortunately, their sheer volume can be daunting, and they require normalization before their full value can be extracted. Thankfully, various log management solutions automatically collect and analyze these log files to give an overall view of an IT environment, and cloud-based solutions make scaling and maintenance much simpler. Many support CSV, JSON and key-value pair formats, so they can store various forms of data while integrating easily into existing IT tools.
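For readers who want to follow a log from a script rather than the command line, the sketch below approximates the behavior of tail -f in Python. It is a simplified illustration under stated assumptions: it polls at a fixed interval, does not handle log rotation or truncation, and the `follow` function and its parameters are names invented for this example.

```python
import io
import time
from typing import Iterator, TextIO


def follow(handle: TextIO, poll_seconds: float = 0.5, from_end: bool = True) -> Iterator[str]:
    """Yield lines as they are appended to an open text file.

    Runs until the caller stops iterating. A line still being written may
    be yielded in pieces; production tools buffer until the newline arrives.
    """
    if from_end:
        handle.seek(0, 2)  # jump to the end, like tail -f, to skip old lines
    while True:
        line = handle.readline()
        if line:
            yield line.rstrip("\n")
        else:
            time.sleep(poll_seconds)  # nothing new yet; wait and retry


# Demo with an in-memory file so the sketch runs without touching disk.
# With a real log you would pass open("/var/log/syslog") and loop forever.
demo_file = io.StringIO("first line\nsecond line\n")
lines = follow(demo_file, from_end=False)
print(next(lines))
print(next(lines))
```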

Access logs

Every time a user or program requests a resource, such as a web page or a file, an entry is written to an access log. These logs show when someone or something attempts to gain entry to restricted systems or files, and they give businesses valuable insight into how their websites are being used by visitors.

Logs like these can also help detect security threats: an unusual increase in requests from one IP address could indicate a denial-of-service attempt, giving website managers the insight they need to spot and address potential problems before they escalate.

Logs typically contain information such as the user’s IP address, request type, filename requested and date/time stamp for every request made by a user. Depending on the type of logging an organization does, additional data points could include the browser type or application sending the request. Access logs are often written in plain-text format, so tools such as cat or tail (on Linux-based systems) can display the entries or follow them as they are written.
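One simple way to spot such a spike is to count requests per client address and flag any address above a limit. The Python sketch below assumes each access log line begins with the client IP address, which is common but not universal; the threshold and sample lines are made up for illustration.

```python
from collections import Counter


def noisy_clients(lines, threshold):
    """Return {ip: request_count} for clients with more than `threshold` requests.

    Assumes the first whitespace-separated field of each line is the client IP.
    """
    counts = Counter(line.split(" ", 1)[0] for line in lines if line.strip())
    return {ip: count for ip, count in counts.items() if count > threshold}


# Hypothetical access log lines.
sample = [
    "203.0.113.7 GET /index.html",
    "203.0.113.7 GET /login",
    "203.0.113.7 GET /login",
    "198.51.100.4 GET /index.html",
]
print(noisy_clients(sample, threshold=2))
```

A fixed threshold is only a starting point; in practice the limit would be tuned against normal traffic levels for the site.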
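The fields listed above can be pulled out of a plain-text entry with a regular expression. The sketch below assumes the widely used Common Log Format; servers can be configured to write other layouts, so treat the pattern and the sample line as illustrative rather than definitive.

```python
import re

# Pattern for the Common Log Format:
# host ident authuser [timestamp] "method path protocol" status size
CLF_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_access_line(line: str):
    """Return a dict of fields, or None if the line does not match the pattern."""
    match = CLF_RE.match(line)
    return match.groupdict() if match else None


# Hypothetical access log entry.
sample_line = '203.0.113.7 - - [01/May/2024:12:00:00 +0000] "GET /index.html HTTP/1.1" 200 5120'
record = parse_access_line(sample_line)
print(record["ip"], record["method"], record["path"], record["status"])
```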

Server logs

Servers of all varieties, web and file servers alike, produce log files detailing the activities and events taking place on them. Reviewing these logs offers an insightful glimpse of server activity, making troubleshooting much easier. They typically include access logs that record information about visitors to websites; agent logs that record the client software (user agent) making each request; and error logs that detail failed server requests.

Reviewing server logs can be done either manually or with digital tools that reduce the amount of work involved. Log files often exist in plain-text format and can be opened using any text editor; web server access logs offer valuable insight into when and by whom a website was visited, including which HTML files or graphic images were requested from it.

However, other server logs can be complex and inconsistently formatted, making them hard to parse and understand. Furthermore, sensitive information contained within log files (e.g. passwords) could be accessible to anyone who can read their directory, creating an increased security risk. Therefore, it’s advisable to use a centralized log management platform.

Change logs

Change logs provide a handy record of changes made in each version of a project, providing both developers and users a way to visually comprehend what has changed and its potential effects.

Updating your change log regularly is crucial to the success of any product or service, as failure to do so could impede its progress. An informative and up-to-date change log makes using your product simpler for users while decreasing bugs or compatibility issues.

A change log can be kept in an XML file, but plain text or database tables work just as effectively for keeping track of changes. No matter the format chosen, keep the change log organized and easy to read.

Creatio allows you to track changes made to your business data through its Change Log feature. To do this, navigate to the page containing the record you wish to review and click “Actions -> View Change Log”. The View Change Log system operation permission must be granted before you can use this feature; you can then choose specific date ranges or search by title/contact name to filter the results.

Conclusion

Log files can be useful tools for tracking system activity and performance, detecting security threats and providing visibility into critical metrics that improve business operations. Unfortunately, managing and analyzing them yourself can be time consuming and complex; that is where Graylog comes in handy as a central log management solution that streamlines storage and analysis.

These tools enable you to monitor performance on your network in real time and to identify and address issues before they impact customers or disrupt your business, reducing both the cost of outages and the impact of downtime.

Logging information gives developers a clearer picture of how their applications operate, helping them discover errors in code and ways to eliminate them, and catch performance problems before they occur, which would otherwise be challenging at scale without proper tools.
